Nearspeak app for iPhone and iPad

Nearspeak provides contextual acoustic information for visually impaired people.

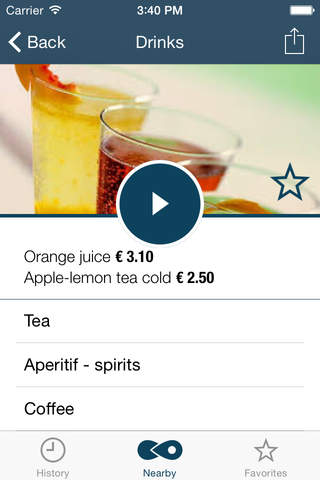

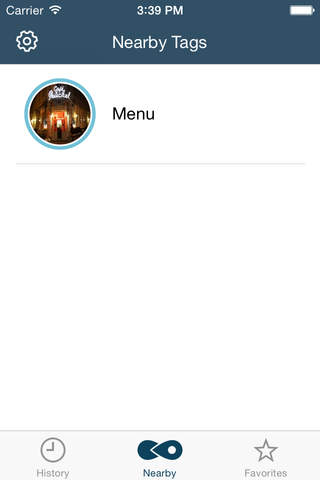

Many everyday situations pose a major challenge for people with impaired vision: What's on the menu? When and where does the next train leave?

A lot of information is available only in visual form today. With Nearspeak, this information can be conveyed through speech.

Nearspeak was designed, developed, and tested with the help of the blind and visually impaired community. It makes use of VoiceOver accessibility information and has a simple, high-contrast interface for visually impaired users.

It uses proximity sensors for more precise localization and information processing.

Supports Apple Watch.